Figure 1. AI is entering classrooms: students and teachers are supported by digital learning tools. (Image generated using OpenAI’s ChatGPT-5)

- Introduction

Artificial Intelligence (AI) is transforming classrooms by offering personalized learning, real-time feedback, and adaptive learning pathways for students. But as schools adopt systems such as the Pythia Learning Enhancement System, these innovations also carry profound ethical implications, as a recent case study highlights [1].

- Background: What is Pythia?

The Pythia system was developed to adapt teaching content dynamically to each learner’s progress. By collecting and analyzing performance data, it suggests strategies tailored to individual needs. The promise is clear: higher engagement, improved outcomes, and more inclusive learning environments.

However, the study stresses that such benefits cannot be separated from the responsibilities that come with using AI in education. Pythia serves as an example of both opportunity and caution: it shows how technology can improve learning, but also how it can create risks when ethical safeguards are overlooked.

- Key Ethical Challenges

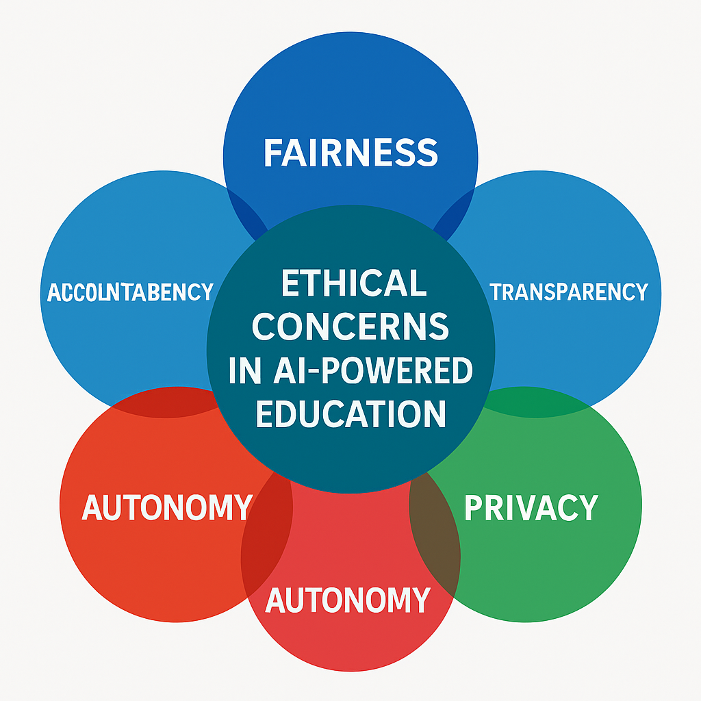

As illustrated in Figure 2, the study identifies five central ethical concerns: Fairness, Transparency, Privacy, Autonomy, and Accountability [2].

Figure 2. The five main ethical challenges identified in the Pythia study: Fairness, Transparency, Privacy, Autonomy, and Accountability (Image generated using OpenAI’s ChatGPT-5)

- Fairness & Bias

AI systems are only as fair as the data that trains them. Skewed datasets may reinforce inequalities, leaving already disadvantaged students further behind [2].

- Transparency & Explainability

For many teachers and parents, how Pythia reaches its conclusions remains a mystery. Without clear explanations, they have little basis for trusting, or questioning, its recommendations.

- Privacy & Data Protection

Pythia continuously collects learner data to optimize its results. This raises concerns about how securely sensitive information is stored and whether students' rights over their own data are respected.

- Teacher & Student Autonomy

While AI offers helpful guidance, overreliance risks undermining teachers’ professional judgment and limiting students’ ability to think critically and independently.

- Accountability & Oversight

When an AI system makes a mistake, such as misclassifying a student's ability or recommending a harmful intervention, who is ultimately responsible: the developers, the school that deployed it, or the teacher who acted on its advice?

- Recommendations from the Study

The authors argue that AI should be a supporting tool, not a replacement for human educators. To ensure ethical deployment, they propose:

o Embedding human oversight in all AI-driven decisions.

o Establishing clear frameworks for fairness, transparency, and accountability [3].

o Strengthening data governance policies to protect learners’ privacy.

o Maintaining open communication with students, parents, and educators about how systems like Pythia function.

- Implications for the Future

The case study emphasizes that the conversation around AI in education must go beyond efficiency and outcomes. Ethical principles—fairness, dignity, and trust—must guide development and implementation.

If designed and used responsibly, AI can help democratize access to quality education. Without safeguards, however, it risks eroding trust in both technology and educational institutions. International bodies share this dual view; the OECD, for example, stresses that AI in education brings both opportunities and risks [4].

- Conclusion

The balance between technological innovation and ethical responsibility remains at the heart of the debate (see Figure 3).

Figure 3. Balancing technological innovation with ethical responsibility is key to the future of AI in education. (Image generated using OpenAI’s ChatGPT-5)

As the study notes, “AI in education is not just a technical challenge—it is a moral one.” Moving forward, collaboration between policymakers, technologists, and educators will be crucial to ensure that systems like Pythia support learners while upholding the values that education is built upon.

- References

[1] S. Röhrl et al., “Ethical Considerations of AI in Education: A Case Study Based on Pythia Learning Enhancement System,” in IEEE Access, vol. 13, pp. 115331-115353, 2025, doi: 10.1109/ACCESS.2025.3583975. https://ieeexplore.ieee.org/document/11053800 [Accessed 15/09/2025].

[2] UNESCO, “AI and Education: Guidance for Policymakers,” Paris: UNESCO, 2021. https://unesdoc.unesco.org/ark:/48223/pf0000376709 [Accessed 15/09/2025].

[3] European Commission, “Ethics Guidelines for Trustworthy AI,” Brussels: European Union, 2019. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai [Accessed 15/09/2025].

[4] OECD, “AI in Education: Challenges and Opportunities,” Paris: OECD Publishing, 2021. https://www.oecd.org/en/about/directorates/directorate-for-education-and-skills.html [Accessed 15/09/2025].